Editor's note: The world is changing so fast that it is almost catching up with science fiction. Self-driving cars are evolving rapidly. Satellites are prospecting nearby asteroids for platinum ore. An AR game has lured homebodies out to the park to catch Pokémon...

Whether these technologies lead us to heaven or to hell may depend on whether they can improve the quality of life for everyone. The same holds for education.

Sinoustimes

In artificial intelligence (AI), one company is working toward education for all of humanity: Value Spring Technology (VST). It is building an AI teacher named “Ali” that can use natural language to tutor children of any age, anywhere in the world, on any topic that interests them. The tool meant to make this ambitious goal possible is VST's own enterpriseMind platform.

What sets this platform apart from almost all other AI is that it can understand the meaning of language and rewrite its own programs; perhaps this is where true artificial intelligence will emerge.

To showcase the potential of the enterpriseMind platform and “Ali TA,” Ali's first major project is to coach students in the Enterprise in Space project, which aims to launch a 3D-printed spacecraft into Earth orbit carrying more than 100 experiments designed by student teams from around the world.

In an interview, the VST team explained how Ali works, how it differs from existing AI software, and how it will guide students throughout their learning careers.

How does enterpriseMind work?

In 1984, William Doyle, founder of VST and inventor of the enterpriseMind platform, filed his first patent for AI software that mimics human thought.

The patent is based on a "meaning engine" that decodes the meaning of words in unstructured sentences, and it was used to write software for data engineering and insurance underwriting. The software can collect data on the underwriting policies of various insurance companies and, based on its analysis, write procedures for company operations.

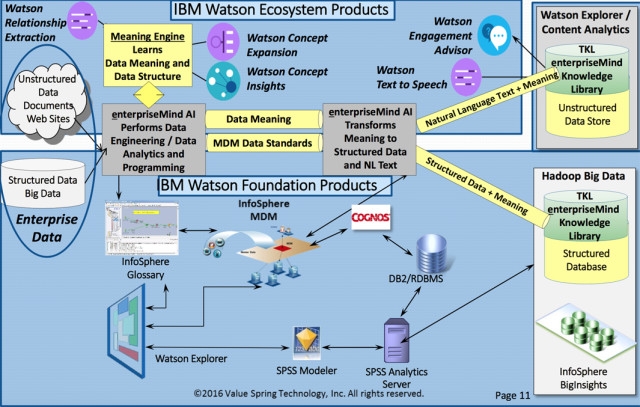

Thirty years later, after the tireless work of several generations of AI experts and IBM engineers, Doyle's patent became the foundation of the enterpriseMind platform and of VST itself, and eventually a cognitive computing technology that caught the attention of IBM Watson. Just as Watson has begun developing cognitive computing languages, VST is building a natural language interface for its AI on the enterpriseMind platform.

To understand how Doyle's AI works, a psychology course may help more than a computer science course, because the enterpriseMind platform is modeled on how human cognition actually works.

In psychology, memory theory distinguishes two kinds of memory: procedural memory and declarative memory.

Procedural memory holds information about how you do your work: if you are a data architect, procedural memory tells you how to design a database. VST's AI stores this kind of procedural memory and knowledge.

The other kind is declarative memory, which itself comes in two forms:

Episodic memory: events in one's life, such as getting up in the morning, drinking coffee, or joining a conference call.

Semantic memory: knowledge of word concepts, sentences, and stories.

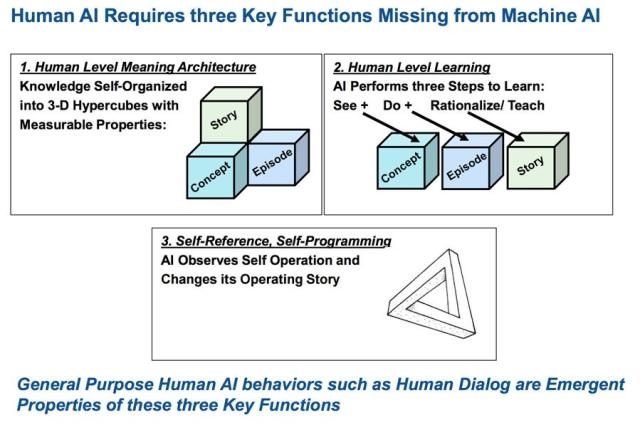

How VST's AI uses three basic levels of meaning to understand the concepts in long stories

Doyle added: "We believe that cognition depends on words, sentences, and stories, and that these elements interact to form procedural knowledge. Our artificial intelligence can now do procedural things, such as designing databases and writing software code, and it can also understand meaning, remember, learn, and classify customer data."

But how are these ideas turned into AI algorithms? Although enterpriseMind is designed around principles of human cognition, those principles still have to be translated, to some extent, into computer science.

To make the AI think like a human and perform a specific job, VST writes dedicated software for it. For example, when designing a complex database, the program has the AI follow the same steps a data engineer takes to build one; these steps make up the AI's procedural memory.

Doyle said: "We interviewed data engineers to understand how they work: How do you design a database? How do you write code? How do you integrate two databases? We collected this information and built our model of a data engineer."

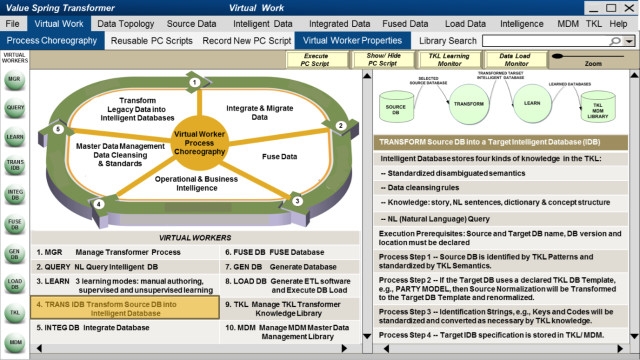

The figure shows the interface of VST's data engineering software and how a data engineer interacts with the AI.

The 129 meanings of "run"

The next important step is for the AI to respond meaningfully to data it has never seen before. VST's cognitive computing software can understand the relationships between words, sentences, and stories, and this is what distinguishes VST's technology from other AI technologies.

Doyle explained: "The language people use is ambiguous. The word 'run' looks very simple, but it has 129 different meanings. The word 'party' has 9 different meanings. On average, every English word has at least 7 meanings. This ambiguity usually stays hidden from those of us who speak the language, because we don't actually notice it: people resolve ambiguity through the meaning of larger linguistic units such as phrases and sentences. We built the enterpriseMind platform on this process. If a machine cannot resolve ambiguous meanings, it cannot implement language functionality."
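
The idea Doyle describes, resolving a word's sense from the words around it, can be illustrated with a classic, publicly known technique, the simplified Lesk algorithm: choose the sense whose dictionary gloss shares the most words with the sentence. The sketch below is not VST's method, which the article does not disclose. The three senses of "run" and their short glosses are made up for illustration; a real dictionary would list many more.

```python
import re

# Hypothetical mini sense inventory for "run"; real dictionaries list far more senses.
SENSES = {
    "run": {
        "run.move": "move fast on foot legs race jog sprint",
        "run.operate": "operate execute program software machine computer code",
        "run.manage": "manage direct lead company business organization",
    },
}

# Function words that would otherwise create meaningless overlaps.
STOPWORDS = {"the", "a", "an", "to", "on", "in", "of", "and", "she", "he", "they", "we", "i"}


def tokens(text: str) -> set[str]:
    """Lower-case word set with common function words removed."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def disambiguate(word: str, sentence: str) -> str:
    """Simplified Lesk: return the sense whose gloss overlaps most with the context."""
    context = tokens(sentence) - {word}
    best_sense, best_overlap = None, -1
    for sense_id, gloss in SENSES[word].items():
        overlap = len(context & tokens(gloss))
        if overlap > best_overlap:  # ties keep the first sense listed
            best_sense, best_overlap = sense_id, overlap
    return best_sense


print(disambiguate("run", "Please run the program on this computer"))  # run.operate
print(disambiguate("run", "She will run the company"))                 # run.manage
```

Counting shared words is crude compared with the sentence- and story-level understanding the article attributes to enterpriseMind, but it shows the core point: the surrounding words, not the word itself, select the meaning.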

Whereas most AI finds keywords in the content and then runs a script based on those keywords, enterpriseMind can already infer what the content means.

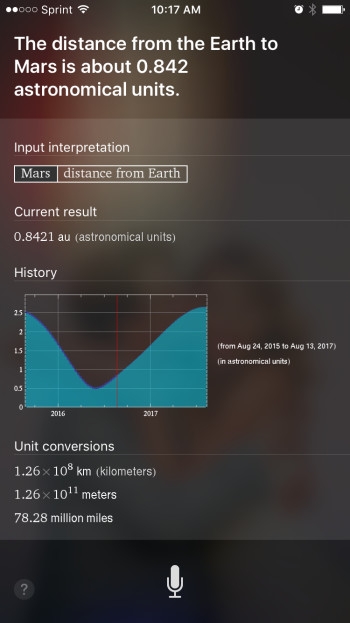

This is why, when you ask Siri a question, she generally returns search results related to the keywords in the question. This strategy works for simple questions such as “What's the weather today?”, but for more complicated ones, such as “How far is Earth from Mars?”, the answer may not be correct.

Unlike Siri and Google Search, VST's AI answers: "The distance between Earth and Mars is not fixed; it depends on where the two planets are in their orbits around the sun."

Typical search engines do not actually understand what "Mars" means in this question; they treat "the distance between Mars and Earth" the same way as "Where is the nearest Starbucks?" But we all know that Mars is not a Starbucks.

Allan Elkowitz, vice president of software development, explained in detail how VST's technology differs from other AI on the market:

"The difference between us and other AI, which just reads a given script, is that enterpriseMind understands the intrinsic meaning of text or language. A table is really structured data with many columns, and we can run machine intelligence over it to determine what each column means. If two insurance companies merge, they will have different databases that use different names for the same thing; we can translate between the two databases and then combine them into one."
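
The merger scenario Elkowitz describes is a schema-mapping problem: two tables name the same concept differently, so both are translated into a shared vocabulary before being combined. The sketch below assumes hypothetical column names and a hand-written mapping; according to Elkowitz, enterpriseMind infers what each column means, which this example does not attempt.

```python
# Hypothetical column-name -> shared-concept dictionary, written by hand here.
CONCEPTS = {
    "pol_no": "policy_id",
    "policy_number": "policy_id",
    "insured_name": "policyholder",
    "holder": "policyholder",
    "prem_amt": "annual_premium",
    "yearly_premium": "annual_premium",
}


def to_canonical(record: dict) -> dict:
    """Rename each column to its shared concept; unknown columns are kept as-is."""
    return {CONCEPTS.get(col, col): value for col, value in record.items()}


def merge_tables(table_a: list[dict], table_b: list[dict]) -> list[dict]:
    """Translate both tables into the shared vocabulary and combine them."""
    return [to_canonical(r) for r in table_a] + [to_canonical(r) for r in table_b]


# Two hypothetical insurers storing the same facts under different column names.
company_a = [{"pol_no": "A-1", "insured_name": "Lee", "prem_amt": 1200}]
company_b = [{"policy_number": "B-7", "holder": "Diaz", "yearly_premium": 950}]

merged = merge_tables(company_a, company_b)
for row in merged:
    print(row)
# {'policy_id': 'A-1', 'policyholder': 'Lee', 'annual_premium': 1200}
# {'policy_id': 'B-7', 'policyholder': 'Diaz', 'annual_premium': 950}
```

The hard part in practice is building `CONCEPTS` itself; the article's claim is that a meaning engine can produce such a mapping automatically instead of an engineer writing it.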

As a demonstration, Doyle used a New York Times article about the 1993 World Trade Center bombing. Once the article is imported into the software, the AI examines each word and its relationship to the rest of the article, and divides the text into related concepts such as "bombing suspects" and "attack victims." The AI can then find the answer to "Who is a suspect?" in the text.

Loading knowledge into the AI

Before an AI can accomplish complex tasks such as building databases, providing insurance services, or teaching, the program must be given a great deal of relevant knowledge. In insurance, for example, the company's AI understands what "insurance," "policy," and "first notice of loss" mean. As far as the VST team knows, enterpriseMind is the only technology platform that can do this.

Finally, the AI can write its own software; in other words, it is capable of machine learning. When it encounters new information, the platform can rewrite its code to adapt to the new data, adding new rules and exceptions.
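
The article does not say how enterpriseMind rewrites its own code. As a much simpler, hypothetical stand-in for "adding new rules and exceptions," the sketch below keeps a default decision plus a growing list of exceptions, checking the most recently added exception first. The underwriting outcomes and the `prior_claims` and `flood_zone` fields are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable

Case = dict                          # a hypothetical insurance application
Condition = Callable[[Case], bool]   # a test applied to a case


@dataclass
class RuleBase:
    default_outcome: str
    # (condition, outcome) pairs; later-added exceptions are checked first.
    exceptions: list[tuple[Condition, str]] = field(default_factory=list)

    def decide(self, case: Case) -> str:
        """Apply the newest matching exception, else fall back to the default."""
        for condition, outcome in reversed(self.exceptions):
            if condition(case):
                return outcome
        return self.default_outcome

    def add_exception(self, condition: Condition, outcome: str) -> None:
        """Add an exception, e.g. after a case the current rules handled wrongly."""
        self.exceptions.append((condition, outcome))


rules = RuleBase(default_outcome="accept")
rules.add_exception(lambda c: c["prior_claims"] > 3, "refer")
print(rules.decide({"prior_claims": 5, "flood_zone": True}))   # refer
rules.add_exception(lambda c: c["flood_zone"], "decline")
print(rules.decide({"prior_claims": 5, "flood_zone": True}))   # decline
print(rules.decide({"prior_claims": 0, "flood_zone": False}))  # accept
```

Storing exceptions as data rather than generating new source code is a deliberate simplification: it shows how behavior can change as new cases arrive without claiming to reproduce VST's self-programming.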

With a library of knowledge it understands, an engine that can decode the meaning of information, and the ability to learn and write its own programs, AI could in the future automatically complete complex human tasks such as processing large amounts of complex data. This could free data engineers and insurance actuaries from much of their computational work and let them focus on other important problems.

Currently, VST's AI technology is being applied to insurance underwriting and risk analysis, to creating and managing data models, and to monitoring and managing infrastructure data. But to make the AI a teaching assistant, VST also needs to add a natural language interface so that teachers and students can talk to it as they would to a person.

Teaching and language dynamics

Enterprise in Space (EIS) is a global online education initiative that provides students around the world with free, cutting-edge teaching resources and courses. It is working with VST to develop Ali, an AI teaching assistant, to help teachers and students around the world teach and learn.

Doyle pointed out: "For an AI like Ali, the key to communicating with humans in natural language is that she has to program herself. There is no magic in human cognition, and there is no magic in Ali, only a different kind of programming: Ali, an AI that mimics an autonomous human, can change her own software, acquire knowledge, and form episodic memories while talking with people... In short, she cannot know in advance what will come up in her conversations and lessons with humans, so she has to write her own scripts, write her own software, and create her own dialogue."

“Human conversation is dynamic: sentences are generated spontaneously, and people make judgments and choices as they go. Unlike software programs, humans do not follow fixed, scripted dialogues. This is exactly what makes the AI we have developed unique.”

Ali will learn natural language through IBM Watson's content analysis APIs, such as text-to-speech, speech-to-text, and WatsonSPSS machine learning. TSTy Holliday, a partner and COO of VST, said: "These APIs make Ali a cloud-based assistant. We build on the tools of the IBM Watson platform, and at the same time we aim to go beyond them. We are also working with IBM engineers to develop cloud data centers so that students from all over the world can connect to Ali."

The figure shows how the VST engine can transform unstructured data into structured data in Watson.

Doyle said that about 80% of business analysis depends on analyzing unstructured data.

Teaching Ali how to be a teacher

To implement the education function of the enterpriseMind platform, the VST team is not modeling database operations as before; instead, it is modeling how teachers teach and how students learn.

To model the teaching process, EIS has arranged for Doyle and his knowledge engineers to sit in on 10-minute lessons, of their choosing, from a wide range of professional instructors around the world. By observing different teachers teach the same content to different students, Doyle believes, the VST team can build an effective model of how knowledge is exchanged between teachers and students and of the procedural tasks involved. Ali can then go out into the world as a teacher, continuing to learn and to improve her programs as she teaches.

Elkowitz described the process this way: "We study what the teachers teach and turn it into a template for success. When a new question is asked (one that may not have an answer yet), Ali will think through the same template: There is a question - the question is about this - I should know about this - where should I look for the answer? - I found the answer - I give the answer to the student."
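
The template Elkowitz outlines is an ordered pipeline, and it can be sketched as such. Everything below is a hypothetical stand-in: the two "knowledge sources," the topic list, and the function names are invented, and the topic step uses plain word matching rather than the meaning engine the article describes.

```python
from typing import Optional

# Hypothetical knowledge sources, searched in the order listed.
SOURCES = {
    "course_notes": {"orbit": "An orbit is the curved path of an object around a star, planet, or moon."},
    "encyclopedia": {"mars": "Mars is the fourth planet from the Sun."},
}
TOPICS = ["orbit", "mars"]


def identify_topic(question: str) -> Optional[str]:
    """Steps 1-2: there is a question; work out what it is about."""
    words = question.lower().replace("?", "").split()
    return next((t for t in TOPICS if t in words), None)


def find_answer(topic: str) -> Optional[tuple[str, str]]:
    """Steps 3-5: decide where to look, search each source, stop at the first hit."""
    for source_name, entries in SOURCES.items():
        if topic in entries:
            return source_name, entries[topic]
    return None


def answer_student(question: str) -> str:
    """Step 6: give the answer to the student, or admit there isn't one yet."""
    topic = identify_topic(question)
    if topic is None:
        return "I'm not sure what this question is about yet."
    found = find_answer(topic)
    if found is None:
        return f"I don't have an answer about {topic} yet."
    source_name, text = found
    return f"{text} (source: {source_name})"


print(answer_student("What is Mars?"))
# Mars is the fourth planet from the Sun. (source: encyclopedia)
```

Keeping each step as a separate function mirrors the template's structure: a better topic detector or a new knowledge source can be swapped in without touching the other steps.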

Ali will also use other machine learning algorithms to process a user's appearance or voice data in order to offer suitable courses, finding the approach that best fits each student's way of learning.

EIS project manager Alice Hoffman added: “Ali will be especially important for children with disabilities. She can help teachers patiently teach the same content to children with disabilities until they fully understand it, which makes her very well suited to blind and deaf children.”

Better AI thinking for better humans

Over the next few weeks, VST will work with EIS to train Ali as a teaching assistant, feeding the content of the teachers' lessons into the program. To see how Ali handles data, the VST team added a plug-in that displays Ali's “knowledge map,” which shows visually how Ali forms words and sentences. If engineers spot a mistake by Ali, they can trace the source of the error through the map.

In effect, the map is a monitor of Ali's brain, which could in principle be compared with a human brain. Doyle wants to use fMRI to compare the AI with the human brain.

Jack Gallant, a psychologist at the University of California, has used fMRI to show that blood flow increases in specific regions of the brain when people listen to words. Doyle wants to use fMRI to monitor blood flow in the brain while teachers teach and students learn. Ideally, this data would help Ali build a better understanding of words, sentences, and stories, and make Ali's brain work more like a real human's.

If Ali succeeds, hundreds of millions of children around the world could have a private tutor even where there is no educational infrastructure at all. If the whole world is truly connected to the Internet by 2020, these students could learn with Ali at a library or simply on their parents' smartphones.

Ali would also bring many benefits to students and teachers in physical classrooms. As an assistant, she would free teachers from answering an endless stream of questions. Students could choose the course content, depth, and teaching methods they prefer, and would no longer need to carry heavy textbooks or study late under a lamp: everything would be in Ali's program and readily available.

Via engineering

