Li Yanhong announced that Baidu will launch driverless cars within the year, and Tesla's CEO recently said that "a driverless car is not a thing," implying that the era of driverless vehicles is near. In fact, driverless technology is still at an early stage. Google's driverless cars have been on the road for some time but are still not commercially available. In the meantime, Advanced Driver Assistance Systems (ADAS) already provide tangible benefits to drivers. ADAS assists and supplements the driver during complex vehicle handling, and is expected to lead eventually to fully driverless operation.

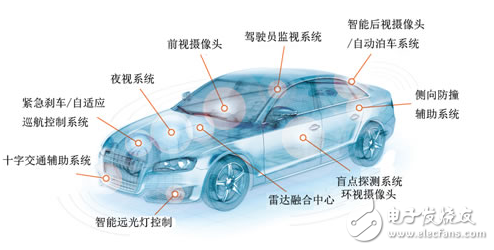

An Advanced Driver Assistance System (ADAS) uses a collection of sensors installed in the vehicle to gather environmental data inside and outside the vehicle in real time, and applies processing such as identification, detection, and tracking of static and dynamic objects. This lets the driver become aware of possible dangers as early as possible, which makes ADAS an active safety technology that draws the driver's attention and improves safety. The sensors used in ADAS mainly include cameras, radar, lasers, and ultrasonic sensors. They can detect light, heat, pressure, or other variables used to monitor the state of the car, and are usually located in the front and rear bumpers, in the side mirrors, inside the steering column, or on the windshield. Early ADAS technologies focused on passive alarms: when the vehicle detected a potential hazard, an alarm alerted the driver to abnormal vehicle or road conditions. In the latest ADAS technologies, active intervention is also common.

Key technologies and applications of ADAS systems

The two key technologies of ADAS are processors and sensors. Although ADAS applications are becoming more and more complex, device performance is rising and costs are falling, so ADAS is spreading from luxury cars to mid-range and low-end cars. Examples include adaptive cruise control, blind spot monitoring, lane departure warning, night vision, lane keeping assist, and collision warning. Active ADAS systems with automatic steering and braking intervention have also begun to reach the wider market.

System: Lane Departure Warning (LDW) Sensor: Camera

When the vehicle leaves its lane or approaches the edge of the road, the system issues an audible alarm or a haptic alarm (a slight vibration of the steering wheel or the seat). These systems activate when the vehicle speed exceeds a certain threshold (e.g., greater than 55 mph) and the turn signal is not on. To judge from the vehicle's position relative to the lane markings whether it is likely to leave the lane, the system must observe the lane markings through a camera. While these application requirements are similar for all vehicle manufacturers, each vendor takes a different approach, using a front-view camera, a rear-view camera, or dual/stereo front-view cameras. For this reason, it is difficult for a single hardware architecture to meet all the different camera requirements; a flexible hardware architecture is needed to provide different implementation choices.
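The activation conditions described above can be sketched as a small decision function. This is only an illustration, not any vendor's implementation: the `LaneState` fields, the `should_warn` name, and the 0.2 m edge margin are hypothetical, and the speed threshold simply reuses the example value from the text.

```python
from dataclasses import dataclass

SPEED_THRESHOLD_MPH = 55.0  # example activation speed taken from the text
EDGE_MARGIN_M = 0.2         # hypothetical margin to the lane marking


@dataclass
class LaneState:
    speed_mph: float       # current vehicle speed
    turn_signal_on: bool   # True if the driver is signalling a lane change
    dist_left_m: float     # camera-estimated distance to the left marking
    dist_right_m: float    # camera-estimated distance to the right marking


def should_warn(state: LaneState) -> bool:
    """Return True when a lane departure alarm should be raised."""
    if state.speed_mph <= SPEED_THRESHOLD_MPH:
        return False  # system is inactive below the speed threshold
    if state.turn_signal_on:
        return False  # an indicated lane change is treated as intentional
    # Warn when either side of the vehicle is about to cross a marking.
    return min(state.dist_left_m, state.dist_right_m) < EDGE_MARGIN_M
```

In a real system the distances would come from the camera's lane-marking detector, which is exactly the part that differs between front-view, rear-view, and stereo camera designs.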

System: Adaptive Cruise Control Sensor: Radar

Over the past decade, luxury cars have adopted Adaptive Cruise Control (ACC), which is now reaching a wider market. Unlike traditional cruise control, which keeps the vehicle moving at a constant speed, ACC adapts the speed to traffic conditions: if the vehicle gets too close to the vehicle ahead, it slows down, and when road conditions permit, it accelerates back up to the set upper limit. These systems are implemented using a radar mounted at the front of the vehicle. However, because radar cannot recognize the size and shape of a target and its field of view is relatively narrow, it should be combined with a camera. The difficulty is that the cameras and radar sensors currently in use are not standardized, so a flexible hardware platform is required.
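The speed-adaptation behavior can be illustrated with a very simple control step. This is a minimal sketch under stated assumptions: the function name `acc_next_speed`, the 2-second time gap, and the fixed 1 m/s adjustment step are all hypothetical, and a production ACC controller would be considerably more sophisticated.

```python
from typing import Optional


def acc_next_speed(own_speed: float, set_speed: float, gap_m: Optional[float],
                   time_gap_s: float = 2.0, step: float = 1.0) -> float:
    """Return the speed (m/s) to command for the next control step.

    own_speed  -- current vehicle speed in m/s
    set_speed  -- driver-selected upper limit in m/s
    gap_m      -- radar distance to the vehicle ahead, or None if none is seen
    time_gap_s -- assumed desired following time gap (hypothetical default)
    step       -- assumed speed change per control step (hypothetical default)
    """
    too_close = gap_m is not None and gap_m < own_speed * time_gap_s
    if too_close:
        return max(0.0, own_speed - step)    # slow down, never below zero
    return min(set_speed, own_speed + step)  # speed up, capped at the limit


# Example: at 25 m/s with a 30 m gap, the desired gap is 50 m, so slow down.
next_speed = acc_next_speed(own_speed=25.0, set_speed=30.0, gap_m=30.0)
```

Expressing the following distance as a time gap rather than a fixed distance makes the required gap grow with speed, which is one common way to frame the problem.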

System: Traffic Sign Recognition Sensor: Camera

As its name suggests, Traffic Sign Recognition (TSR) uses a forward-facing camera combined with pattern recognition software to identify common traffic signs (speed limit, parking, U-turn, etc.). It alerts the driver to the signs ahead so that the driver can obey them. TSR reduces the chance that a driver fails to comply with signs such as stop signs, and helps avoid illegal left turns and other unintentional traffic violations, thereby improving safety. These systems require a flexible software platform so that the detection algorithms can be enhanced and adapted to the traffic signs used in different regions.

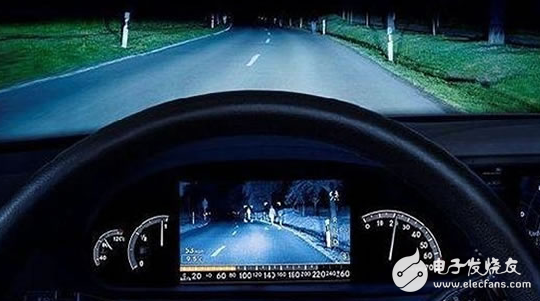

System: Night Vision Sensor: IR or Thermal Imaging Camera

The Night Vision (NV) system helps the driver identify objects in very dark conditions. These objects are generally beyond the reach of the vehicle's headlights, so the NV system gives the driver early warning of objects on the road ahead and helps avoid collisions. NV systems use a variety of camera sensors and displays, depending on the manufacturer, but generally fall into two basic types: active and passive.

• Active systems, also known as near-IR systems, combine a charge-coupled device (CCD) camera with an IR light source to present a black-and-white image on the display. The resolution of these systems is high and the image quality is very good. Their typical viewing range is 150 meters. These systems can see all objects in the camera's field of view (including those that emit no heat), but their effectiveness drops sharply in rain and snow.

• Passive systems do not use an external light source; instead they rely on thermal imaging cameras that capture images from the natural thermal radiation of objects. These systems are not affected by oncoming headlights or by severe weather, and have detection ranges from 300 to 1,000 meters. Their disadvantages are that the image is grainy and that performance can be limited in warmer weather. Moreover, passive systems can only detect objects that emit thermal radiation. Combined with video analytics, passive systems can clearly show objects on the road ahead, such as pedestrians.

NV systems offer a variety of architectural choices, each with its own advantages and disadvantages. To stay competitive, automakers should support a variety of camera sensors and implement them on a versatile, flexible hardware platform.

System: Adaptive High Beam Control Sensor: Camera

Adaptive High Beam Control (AHBC) is a smart headlight control system that uses a camera to detect traffic conditions (oncoming traffic and traffic ahead in the same direction) and brightens or dims the high beams accordingly. AHBC lets the driver use the high beams at the maximum possible illumination distance without having to dim them manually when other vehicles are present, which avoids distracting the driver and thus improves safety. In some systems the headlights can even be controlled separately, dimming one headlight while the other stays at normal brightness. AHBC complements forward camera systems such as lane departure warning (LDW) and TSR. These systems do not require a high-resolution camera, so if a vehicle already has a front-view camera for another ADAS application, adding this feature is very cost-effective.
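The dimming decision, including the per-headlight variant, can be sketched as follows. The names `Beam` and `select_beams` are hypothetical, and the mapping from "vehicle detected in the left or right half of the camera image" to "dim the headlight on that side" is a simplifying assumption made only for illustration.

```python
from enum import Enum
from typing import Tuple


class Beam(Enum):
    HIGH = "high"
    LOW = "low"


def select_beams(vehicle_left: bool, vehicle_right: bool,
                 independent: bool = False) -> Tuple[Beam, Beam]:
    """Return the (left, right) headlight states.

    vehicle_left / vehicle_right -- camera reports another vehicle in that
                                    half of its field of view (assumed input)
    independent -- True if the headlights can be dimmed separately
    """
    if independent:
        left = Beam.LOW if vehicle_left else Beam.HIGH
        right = Beam.LOW if vehicle_right else Beam.HIGH
        return left, right
    # Without separate control, any detected vehicle dims both headlights.
    both = Beam.LOW if (vehicle_left or vehicle_right) else Beam.HIGH
    return both, both
```

Because the inputs are only coarse presence flags, a low-resolution camera is sufficient for this kind of logic.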

System: Pedestrian/Obstacle/Vehicle Detection (PD) Sensor: Camera, Radar, IR

Pedestrian (and obstacle and vehicle) detection (PD) systems rely mainly on camera sensors to perceive the surrounding environment, using a single camera or, in more complex systems, stereo cameras. Pedestrian appearance varies widely (clothing, lighting, size, and distance), the background is complex and constantly changing, and the sensors are mounted on a moving platform (the vehicle), all of which make it difficult to determine the visual characteristics of pedestrians on the move. IR sensors can therefore be used to enhance the PD system. Radar can also enhance vehicle detection: it provides excellent distance measurement, performs well in harsh weather, and can measure a vehicle's speed. Such a complex system needs to use data from multiple sensors simultaneously.
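One way to picture the use of multiple sensors together is to attach radar range and speed to camera detections that lie in roughly the same direction. The sketch below is an assumption-laden illustration rather than an established fusion algorithm from the source: all class names, the `fuse` function, and the 2-degree matching tolerance are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CameraDetection:
    label: str          # e.g. "pedestrian" or "vehicle", from the classifier
    bearing_deg: float  # direction of the object relative to the vehicle


@dataclass
class RadarTrack:
    bearing_deg: float
    range_m: float
    speed_mps: float


@dataclass
class FusedObject:
    label: str
    bearing_deg: float
    range_m: Optional[float]    # None if no radar track matched
    speed_mps: Optional[float]


def fuse(cams: List[CameraDetection], radars: List[RadarTrack],
         max_bearing_diff_deg: float = 2.0) -> List[FusedObject]:
    """Attach the closest-bearing radar track to each camera detection."""
    fused = []
    for cam in cams:
        candidates = [r for r in radars
                      if abs(r.bearing_deg - cam.bearing_deg) <= max_bearing_diff_deg]
        best = min(candidates,
                   key=lambda r: abs(r.bearing_deg - cam.bearing_deg),
                   default=None)
        fused.append(FusedObject(cam.label, cam.bearing_deg,
                                 best.range_m if best else None,
                                 best.speed_mps if best else None))
    return fused
```

The camera contributes what the object is, while the radar contributes how far away it is and how fast it is moving, which mirrors the division of strengths described above.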

System: Driver Drowsiness Alarm Sensor: In-Car IR Camera

The drowsiness alarm system monitors the driver's face, measuring head position, eye state (open/closed), and other similar indicators of alertness. The system issues an alarm if it determines that the driver is showing signs of falling asleep or appears to be unconscious. Some systems also monitor heart rate and breathing. Ideas that have not yet been realized include having the vehicle steer itself to the roadside and come to a stop.
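A simple way to turn per-frame eye state into an alarm is to track the fraction of recent frames in which the eyes were closed. The sketch below assumes an upstream face-analysis step already reports open/closed eyes per frame; the `DrowsinessMonitor` class, the window length, and the 50% threshold are hypothetical choices for illustration only.

```python
from collections import deque


class DrowsinessMonitor:
    """Raise an alarm when eyes are closed in too many recent frames."""

    def __init__(self, window: int = 30, closed_fraction: float = 0.5):
        self.samples = deque(maxlen=window)     # most recent eye states
        self.closed_fraction = closed_fraction  # assumed alarm threshold

    def update(self, eyes_closed: bool) -> bool:
        """Record one frame and return True if the alarm should sound."""
        self.samples.append(eyes_closed)
        if len(self.samples) < self.samples.maxlen:
            return False  # not enough history yet to decide
        closed = sum(self.samples) / len(self.samples)
        return closed >= self.closed_fraction
```

Using a sliding window rather than a single frame keeps ordinary blinks from triggering the alarm. Head position or heart rate, mentioned above, could be added as further inputs in the same style.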
